A guide to creating a chatbot with Rasa stack and Python.

Conversational AI systems have become an indispensable part of the human ecosystem. Well-known examples include Apple's Siri, Amazon's Alexa, and Microsoft's Cortana. Let's build a machine-learning-based AI assistant with Rasa.

Objective

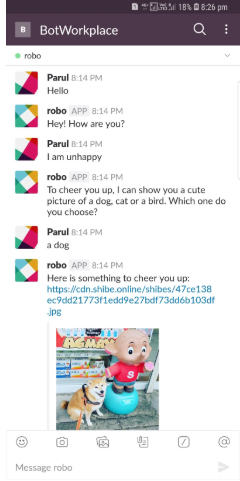

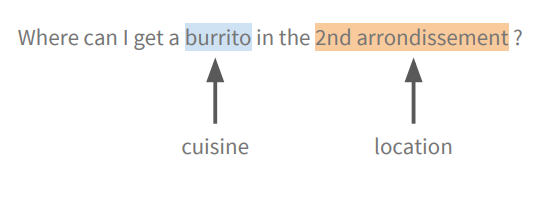

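In this post we build a chatbot called 'Zoe' that checks on the user's mood and takes an appropriate action to cheer them up. In the next post we will deploy it to Slack. The finished bot will look like the following screenshot.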

https://cdn-images-1.medium.com/max/800/1*YCBEujcXGf4MFOT02my5hQ.png

Requirements

To build it, we need to install the Rasa Stack and a language model. The language model is used to extract the necessary information from incoming text messages. We will use a spaCy language model.

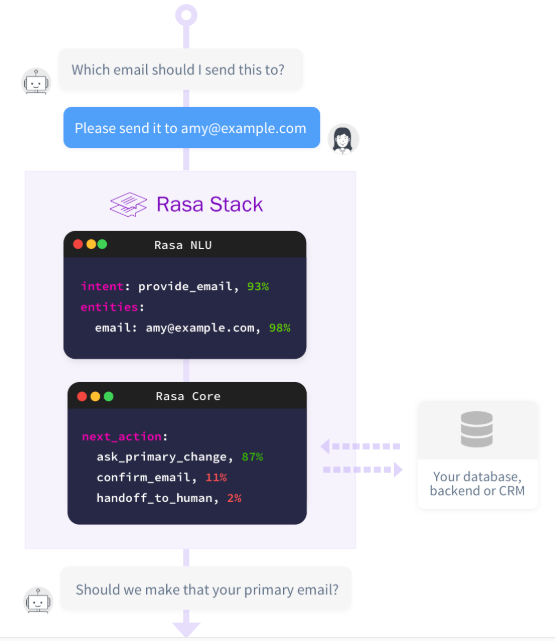

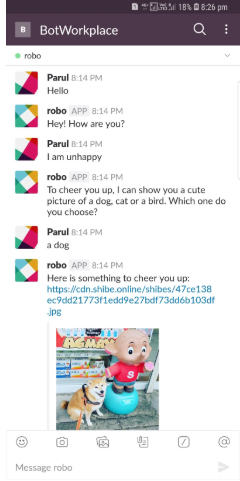

Rasa Stack

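Rasa Stack is a set of open-source machine learning tools for conversational AI. The bot is based on a machine learning model trained on sample conversations. It consists of the following two frameworks.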

<source> https://cdn-images-1.medium.com/max/800/1*4Wu5EUB9yJ2AUfoPGMSLXw.png

Rasa NLU: a natural language understanding library with intent classification and entity extraction. It helps the chatbot understand what the user is saying.

Rasa Core: a machine-learning-based dialogue management framework. It predicts the best next action based on the input from NLU, the conversation history, and the training data.
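To make the division of labour concrete, here is a toy sketch of the data flow between the two parts. It is not the Rasa API: `toy_nlu` and `toy_core` are hypothetical hand-written stand-ins for the trained models, and the action names are made up for illustration.

```python
import re

# Toy illustration only: these functions are NOT part of Rasa.

def toy_nlu(text):
    """Stand-in for Rasa NLU: turn raw text into a structured result."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & {"hello", "hi", "hey"}:
        intent = "greet"
    elif words & {"sad", "unhappy"}:
        intent = "mood_unhappy"
    else:
        intent = "unknown"
    return {"text": text, "intent": intent}


def toy_core(nlu_result, history):
    """Stand-in for Rasa Core: pick the next action from the latest
    intent and the actions taken so far."""
    intent = nlu_result["intent"]
    if intent == "greet" and "utter_greet" not in history:
        return "utter_greet"          # hypothetical action name
    if intent == "mood_unhappy":
        return "utter_cheer_up"       # hypothetical action name
    return "action_listen"            # hypothetical action name


history = []
for message in ["hello there", "I am sad"]:
    action = toy_core(toy_nlu(message), history)
    history.append(action)
    print(message, "->", action)
```

In the real stack both steps are learned from examples rather than written as rules; the sketch only shows which information each part consumes and produces.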

More details about Rasa can be found in the official documentation.

Installations

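We will run the code below in a Jupyter Notebook. Before starting, if you have installed any of the related libraries before, uninstall them completely and start fresh. This example relies on three main libraries, and it will not run properly if their versions do not match. For example, installing without pinning both versions produces the conflict shown below. We therefore strongly recommend removing all three libraries and starting again from scratch.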

import sys

python = sys.executable

# In your environment run:

!{python} -m pip install -U rasa_core==0.9.6 rasa_nlu[spacy];

rasa-core 0.9.6 has requirement rasa-nlu~=0.12.0, but you'll have rasa-nlu 0.14.3 which is incompatible.

rasa-nlu 0.14.3 has requirement scikit-learn=0.20.2, but you'll have scikit-learn 0.19.2 which is incompatible.
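Before removing anything, it can help to see which versions are currently installed. The sketch below is one way to do that from Python; `installed_versions` is a hypothetical helper, and it assumes Python 3.8 or later, where `importlib.metadata` is part of the standard library. On the Python 3.6 environment used in this post, `pip show` as in the next step gives the same information.

```python
# importlib.metadata is in the standard library from Python 3.8 onward.
from importlib import metadata


def installed_versions(package_names):
    """Return {name: version string, or None if the package is not installed}."""
    versions = {}
    for name in package_names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# The three libraries this tutorial depends on.
for name, version in installed_versions(["rasa_core", "rasa_nlu", "spacy"]).items():
    print(f"{name}: {version or 'not installed'}")
```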

1) Removing previously installed libraries

Removing rasa_core

(tfKeras) founder@hilbert:~/rasa$ pip show rasa_core

Name: rasa-core

Version: 0.13.2

Summary: Machine learning based dialogue engine for conversational software.

Home-page: https://rasa.com

Author: Rasa Technologies GmbH

Author-email: hi@rasa.com

License: Apache 2.0

Location: /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages

Requires: flask-jwt-simple, scikit-learn, typing, redis, webexteamssdk, twilio, flask-cors, tqdm, keras-preprocessing, pytz, jsonpickle, gevent, coloredlogs, keras-applications, numpy, tensorflow, slackclient, python-telegram-bot, apscheduler, pika, terminaltables, colorclass, jsonschema, python-dateutil, rasa-core-sdk, ruamel.yaml, pymongo, fakeredis, pydot, questionary, flask, pykwalify, rocketchat-API, packaging, requests, fbmessenger, colorhash, scipy, python-socketio, networkx, rasa-nlu, mattermostwrapper

Required-by:

(tfKeras) founder@hilbert:~/rasa$ pip uninstall rasa_core

Uninstalling rasa-core-0.13.2:

Would remove:

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/examples/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/rasa_core-0.13.2.dist-info/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/rasa_core/*

Proceed (y/n)? y

Successfully uninstalled rasa-core-0.13.2

(tfKeras) founder@hilbert:~/rasa$ pip show rasa_core

Removing rasa_nlu

(tfKeras) founder@hilbert:~/rasa$ pip show rasa_nlu

Name: rasa-nlu

Version: 0.14.3

Summary: Rasa NLU a natural language parser for bots

Home-page: https://rasa.com

Author: Rasa Technologies GmbH

Author-email: hi@rasa.com

License: Apache 2.0

Location: /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages

Requires: requests, jsonschema, six, boto3, packaging, typing, simplejson, coloredlogs, scikit-learn, matplotlib, tqdm, future, klein, ruamel.yaml, cloudpickle, numpy, gevent

Required-by:

(tfKeras) founder@hilbert:~/rasa$ pip uninstall rasa_nlu

Uninstalling rasa-nlu-0.14.3:

Would remove:

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/rasa_nlu-0.14.3.dist-info/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/rasa_nlu/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/tests/base/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/tests/training/*

Proceed (y/n)? y

Successfully uninstalled rasa-nlu-0.14.3

(tfKeras) founder@hilbert:~/rasa$ pip uninstall rasa_nlu

Skipping rasa-nlu as it is not installed.

(tfKeras) founder@hilbert:~/rasa$ pip show rasa_nlu

Removing spacy

(tfKeras) founder@hilbert:~/rasa$ pip show spacy

Name: spacy

Version: 2.0.18

Summary: Industrial-strength Natural Language Processing (NLP) with Python and Cython

Home-page: https://spacy.io

Author: Explosion AI

Author-email: contact@explosion.ai

License: MIT

Location: /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages

Requires: preshed, ujson, dill, numpy, murmurhash, thinc, plac, requests, regex, cymem

Required-by: en-core-web-md

(tfKeras) founder@hilbert:~/rasa$ pip uninstall spacy

Uninstalling spacy-2.0.18:

Would remove:

/home/founder/anaconda3/envs/tfKeras/bin/spacy

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/spacy-2.0.18.dist-info/*

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/spacy/*

Proceed (y/n)? y

Successfully uninstalled spacy-2.0.18

(tfKeras) founder@hilbert:~/rasa$ pip show spacy

(tfKeras) founder@hilbert:~/rasa$

Then delete all files and subdirectories under the rasa directory, keeping only the notebook file and the checkpoints directory.
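The cleanup can also be scripted. The following is a minimal sketch, assuming the notebook has an `.ipynb` extension and the checkpoints directory is named `.ipynb_checkpoints`; `clean_workdir` and the file names in the demo are hypothetical. It defaults to a dry run because the deletion is irreversible, and the demo runs in a temporary directory rather than the real working directory.

```python
import shutil
import tempfile
from pathlib import Path


def clean_workdir(workdir, keep_suffixes=(".ipynb",),
                  keep_dirs=(".ipynb_checkpoints",), dry_run=True):
    """Delete everything directly under workdir except notebooks and the
    checkpoints directory. Returns the names that were (or, in a dry run,
    would be) removed."""
    removed = []
    for entry in sorted(Path(workdir).iterdir()):
        if entry.is_dir() and entry.name in keep_dirs:
            continue
        if entry.is_file() and entry.suffix in keep_suffixes:
            continue
        removed.append(entry.name)
        if not dry_run:
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)
            else:
                entry.unlink()
    return removed


# Demo in a throwaway directory with hypothetical file names.
demo = Path(tempfile.mkdtemp())
(demo / "chatbot.ipynb").write_text("{}")
(demo / ".ipynb_checkpoints").mkdir()
(demo / "models").mkdir()
(demo / "nlu.md").write_text("old data")
print(clean_workdir(demo, dry_run=False))
print(sorted(p.name for p in demo.iterdir()))
shutil.rmtree(demo)
```

Run it with `dry_run=True` first and check the returned list before deleting anything for real.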

2) Starting Jupyter Notebook with necessary imports

Now start Jupyter Notebook and import the required libraries.

%matplotlib inline

import logging, io, json, warnings

logging.basicConfig(level="INFO")

warnings.filterwarnings('ignore')

3) Primary Installations

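We need Rasa NLU, Rasa Core, and a spaCy language model, so let's install them. The Rasa Core version used in this tutorial is not the latest one. Running the tutorial with the latest version may raise errors, so be sure to pin the versions as in the command below.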

import sys

python = sys.executable

# In your environment run:

!{python} -m pip install -U rasa_core==0.9.6 rasa_nlu[spacy]==0.12.0;

Collecting rasa_core==0.9.6

Collecting rasa_nlu[spacy]==0.12.0

Requirement already satisfied, skipping upgrade: six~=1.0 in /home/founder/.local/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.12.0)

Requirement already satisfied, skipping upgrade: flask~=1.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.0.2)

Requirement already satisfied, skipping upgrade: h5py~=2.0 in /home/founder/.local/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.8.0)

Requirement already satisfied, skipping upgrade: scikit-learn~=0.19.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.19.2)

Requirement already satisfied, skipping upgrade: flask-cors~=3.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (3.0.7)

Requirement already satisfied, skipping upgrade: typing~=3.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (3.6.6)

Requirement already satisfied, skipping upgrade: tensorflow<1.9,>=1.7 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.8.0)

Requirement already satisfied, skipping upgrade: future~=0.16 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.17.1)

Requirement already satisfied, skipping upgrade: keras~=2.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.2.4)

Requirement already satisfied, skipping upgrade: numpy~=1.13 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.16.1)

Requirement already satisfied, skipping upgrade: pykwalify<=1.6.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.6.0)

Requirement already satisfied, skipping upgrade: ruamel.yaml~=0.15.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.15.88)

Requirement already satisfied, skipping upgrade: fakeredis~=0.10.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.10.3)

Requirement already satisfied, skipping upgrade: slackclient~=1.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.3.0)

Requirement already satisfied, skipping upgrade: fbmessenger~=5.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (5.4.0)

Requirement already satisfied, skipping upgrade: requests~=2.15 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.21.0)

Requirement already satisfied, skipping upgrade: apscheduler~=3.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (3.5.3)

Requirement already satisfied, skipping upgrade: networkx~=2.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.2)

Requirement already satisfied, skipping upgrade: graphviz~=0.8.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.8.4)

Requirement already satisfied, skipping upgrade: ConfigArgParse~=0.13.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.13.0)

Requirement already satisfied, skipping upgrade: twilio~=6.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (6.24.1)

Requirement already satisfied, skipping upgrade: coloredlogs~=10.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (10.0)

Requirement already satisfied, skipping upgrade: python-telegram-bot~=10.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (10.1.0)

Requirement already satisfied, skipping upgrade: tqdm~=4.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (4.31.1)

Requirement already satisfied, skipping upgrade: jsonpickle~=0.9.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (0.9.6)

Requirement already satisfied, skipping upgrade: colorhash~=1.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (1.0.2)

Requirement already satisfied, skipping upgrade: mattermostwrapper~=2.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.1)

Requirement already satisfied, skipping upgrade: redis~=2.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_core==0.9.6) (2.10.6)

Requirement already satisfied, skipping upgrade: pathlib in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (1.0.1)

Requirement already satisfied, skipping upgrade: matplotlib in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (2.2.3)

Requirement already satisfied, skipping upgrade: packaging in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (18.0)

Requirement already satisfied, skipping upgrade: cloudpickle in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (0.6.1)

Requirement already satisfied, skipping upgrade: pyyaml in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (3.13)

Requirement already satisfied, skipping upgrade: simplejson in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (3.16.0)

Requirement already satisfied, skipping upgrade: gevent in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (1.4.0)

Requirement already satisfied, skipping upgrade: klein in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (17.10.0)

Requirement already satisfied, skipping upgrade: boto3 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (1.9.82)

Requirement already satisfied, skipping upgrade: jsonschema in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (2.6.0)

Collecting spacy>2.0; extra == "spacy" (from rasa_nlu[spacy]==0.12.0)

Requirement already satisfied, skipping upgrade: scipy; extra == "spacy" in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (1.2.1)

Requirement already satisfied, skipping upgrade: sklearn-crfsuite; extra == "spacy" in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from rasa_nlu[spacy]==0.12.0) (0.3.6)

Requirement already satisfied, skipping upgrade: Werkzeug>=0.14 in /home/founder/.local/lib/python3.6/site-packages (from flask~=1.0->rasa_core==0.9.6) (0.14.1)

Requirement already satisfied, skipping upgrade: Jinja2>=2.10 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from flask~=1.0->rasa_core==0.9.6) (2.10)

Requirement already satisfied, skipping upgrade: click>=5.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from flask~=1.0->rasa_core==0.9.6) (7.0)

Requirement already satisfied, skipping upgrade: itsdangerous>=0.24 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from flask~=1.0->rasa_core==0.9.6) (1.1.0)

Requirement already satisfied, skipping upgrade: grpcio>=1.8.6 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (1.17.1)

Requirement already satisfied, skipping upgrade: tensorboard<1.9.0,>=1.8.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (1.8.0)

Requirement already satisfied, skipping upgrade: absl-py>=0.1.6 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (0.6.1)

Requirement already satisfied, skipping upgrade: protobuf>=3.4.0 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (3.6.1)

Requirement already satisfied, skipping upgrade: wheel>=0.26 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (0.32.3)

Requirement already satisfied, skipping upgrade: termcolor>=1.1.0 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (1.1.0)

Requirement already satisfied, skipping upgrade: astor>=0.6.0 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (0.7.1)

Requirement already satisfied, skipping upgrade: gast>=0.2.0 in /home/founder/.local/lib/python3.6/site-packages (from tensorflow<1.9,>=1.7->rasa_core==0.9.6) (0.2.0)

Requirement already satisfied, skipping upgrade: keras-applications>=1.0.6 in /home/founder/.local/lib/python3.6/site-packages (from keras~=2.0->rasa_core==0.9.6) (1.0.6)

Requirement already satisfied, skipping upgrade: keras-preprocessing>=1.0.5 in /home/founder/.local/lib/python3.6/site-packages (from keras~=2.0->rasa_core==0.9.6) (1.0.5)

Requirement already satisfied, skipping upgrade: docopt>=0.6.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from pykwalify<=1.6.0->rasa_core==0.9.6) (0.6.2)

Requirement already satisfied, skipping upgrade: python-dateutil>=2.4.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from pykwalify<=1.6.0->rasa_core==0.9.6) (2.7.5)

Requirement already satisfied, skipping upgrade: websocket-client<1.0a0,>=0.35 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from slackclient~=1.0->rasa_core==0.9.6) (0.54.0)

Requirement already satisfied, skipping upgrade: idna<2.9,>=2.5 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from requests~=2.15->rasa_core==0.9.6) (2.8)

Requirement already satisfied, skipping upgrade: chardet<3.1.0,>=3.0.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from requests~=2.15->rasa_core==0.9.6) (3.0.4)

Requirement already satisfied, skipping upgrade: urllib3<1.25,>=1.21.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from requests~=2.15->rasa_core==0.9.6) (1.24.1)

Requirement already satisfied, skipping upgrade: certifi>=2017.4.17 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from requests~=2.15->rasa_core==0.9.6) (2018.11.29)

Requirement already satisfied, skipping upgrade: tzlocal>=1.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from apscheduler~=3.0->rasa_core==0.9.6) (1.5.1)

Requirement already satisfied, skipping upgrade: pytz in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from apscheduler~=3.0->rasa_core==0.9.6) (2018.9)

Requirement already satisfied, skipping upgrade: setuptools>=0.7 in /home/founder/.local/lib/python3.6/site-packages (from apscheduler~=3.0->rasa_core==0.9.6) (40.6.3)

Requirement already satisfied, skipping upgrade: decorator>=4.3.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from networkx~=2.0->rasa_core==0.9.6) (4.3.0)

Requirement already satisfied, skipping upgrade: pysocks; python_version >= "3.0" in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from twilio~=6.0->rasa_core==0.9.6) (1.6.8)

Requirement already satisfied, skipping upgrade: PyJWT>=1.4.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from twilio~=6.0->rasa_core==0.9.6) (1.7.1)

Requirement already satisfied, skipping upgrade: humanfriendly>=4.7 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from coloredlogs~=10.0->rasa_core==0.9.6) (4.17)

Requirement already satisfied, skipping upgrade: kiwisolver>=1.0.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from matplotlib->rasa_nlu[spacy]==0.12.0) (1.0.1)

Requirement already satisfied, skipping upgrade: cycler>=0.10 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from matplotlib->rasa_nlu[spacy]==0.12.0) (0.10.0)

Requirement already satisfied, skipping upgrade: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from matplotlib->rasa_nlu[spacy]==0.12.0) (2.3.0)

Requirement already satisfied, skipping upgrade: greenlet>=0.4.14; platform_python_implementation == "CPython" in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from gevent->rasa_nlu[spacy]==0.12.0) (0.4.15)

Requirement already satisfied, skipping upgrade: incremental in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from klein->rasa_nlu[spacy]==0.12.0) (17.5.0)

Requirement already satisfied, skipping upgrade: Twisted>=15.5 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from klein->rasa_nlu[spacy]==0.12.0) (18.9.0)

Requirement already satisfied, skipping upgrade: botocore<1.13.0,>=1.12.82 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from boto3->rasa_nlu[spacy]==0.12.0) (1.12.82)

Requirement already satisfied, skipping upgrade: jmespath<1.0.0,>=0.7.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from boto3->rasa_nlu[spacy]==0.12.0) (0.9.3)

Requirement already satisfied, skipping upgrade: s3transfer<0.2.0,>=0.1.10 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from boto3->rasa_nlu[spacy]==0.12.0) (0.1.13)

Requirement already satisfied, skipping upgrade: murmurhash<1.1.0,>=0.28.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.28.0)

Requirement already satisfied, skipping upgrade: dill<0.3,>=0.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.2.9)

Requirement already satisfied, skipping upgrade: ujson>=1.35 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (1.35)

Collecting cymem<2.1.0,>=2.0.2 (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0)

Requirement already satisfied, skipping upgrade: plac<1.0.0,>=0.9.6 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.9.6)

Collecting thinc<6.13.0,>=6.12.1 (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0)

Collecting regex==2018.01.10 (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0)

Collecting preshed<2.1.0,>=2.0.1 (from spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0)

Requirement already satisfied, skipping upgrade: tabulate in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from sklearn-crfsuite; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.8.3)

Requirement already satisfied, skipping upgrade: python-crfsuite>=0.8.3 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from sklearn-crfsuite; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.9.6)

Requirement already satisfied, skipping upgrade: MarkupSafe>=0.23 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Jinja2>=2.10->flask~=1.0->rasa_core==0.9.6) (1.1.0)

Requirement already satisfied, skipping upgrade: bleach==1.5.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow<1.9,>=1.7->rasa_core==0.9.6) (1.5.0)

Requirement already satisfied, skipping upgrade: html5lib==0.9999999 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow<1.9,>=1.7->rasa_core==0.9.6) (0.9999999)

Requirement already satisfied, skipping upgrade: markdown>=2.6.8 in /home/founder/.local/lib/python3.6/site-packages (from tensorboard<1.9.0,>=1.8.0->tensorflow<1.9,>=1.7->rasa_core==0.9.6) (3.0.1)

Requirement already satisfied, skipping upgrade: hyperlink>=17.1.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (18.0.0)

Requirement already satisfied, skipping upgrade: PyHamcrest>=1.9.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (1.9.0)

Requirement already satisfied, skipping upgrade: zope.interface>=4.4.2 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (4.6.0)

Requirement already satisfied, skipping upgrade: Automat>=0.3.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (0.7.0)

Requirement already satisfied, skipping upgrade: constantly>=15.1 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (15.1.0)

Requirement already satisfied, skipping upgrade: attrs>=17.4.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from Twisted>=15.5->klein->rasa_nlu[spacy]==0.12.0) (18.2.0)

Requirement already satisfied, skipping upgrade: docutils>=0.10 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from botocore<1.13.0,>=1.12.82->boto3->rasa_nlu[spacy]==0.12.0) (0.14)

Requirement already satisfied, skipping upgrade: cytoolz<0.10,>=0.9.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from thinc<6.13.0,>=6.12.1->spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.9.0.1)

Requirement already satisfied, skipping upgrade: msgpack-numpy<0.4.4 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from thinc<6.13.0,>=6.12.1->spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.4.3.2)

Requirement already satisfied, skipping upgrade: wrapt<1.11.0,>=1.10.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from thinc<6.13.0,>=6.12.1->spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (1.10.11)

Requirement already satisfied, skipping upgrade: msgpack<0.6.0,>=0.5.6 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from thinc<6.13.0,>=6.12.1->spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.5.6)

Requirement already satisfied, skipping upgrade: toolz>=0.8.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (from cytoolz<0.10,>=0.9.0->thinc<6.13.0,>=6.12.1->spacy>2.0; extra == "spacy"->rasa_nlu[spacy]==0.12.0) (0.9.0)

Installing collected packages: cymem, preshed, thinc, regex, spacy, rasa-nlu, rasa-core

Found existing installation: cymem 1.31.2

Uninstalling cymem-1.31.2:

Successfully uninstalled cymem-1.31.2

Found existing installation: preshed 1.0.1

Uninstalling preshed-1.0.1:

Successfully uninstalled preshed-1.0.1

Found existing installation: thinc 6.10.3

Uninstalling thinc-6.10.3:

Successfully uninstalled thinc-6.10.3

Found existing installation: regex 2017.4.5

Uninstalling regex-2017.4.5:

Successfully uninstalled regex-2017.4.5

Successfully installed cymem-2.0.2 preshed-2.0.1 rasa-core-0.9.6 rasa-nlu-0.12.0 regex-2018.1.10 spacy-2.0.18 thinc-6.12.1

!{python} -m spacy download en_core_web_md

Requirement already satisfied: en_core_web_md==2.0.0 from https://github.com/explosion/spacy-models/releases/download/en_core_web_md-2.0.0/en_core_web_md-2.0.0.tar.gz#egg=en_core_web_md==2.0.0 in /home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages (2.0.0)

Linking successful

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/en_core_web_md

-->

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/spacy/data/en_core_web_md

You can now load the model via spacy.load('en_core_web_md')

The command above downloads the English language model. Next, link it to the shortcut name en.

!{python} -m spacy link en_core_web_md en --force;

Linking successful

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/en_core_web_md

-->

/home/founder/anaconda3/envs/tfKeras/lib/python3.6/site-packages/spacy/data/en

You can now load the model via spacy.load('en')

Import the installed libraries.

#importing the installations

import rasa_nlu

import rasa_core

import spacy

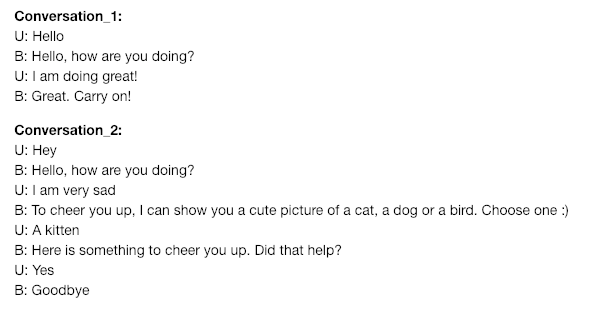

Teaching the bot to understand user inputs using Rasa NLU

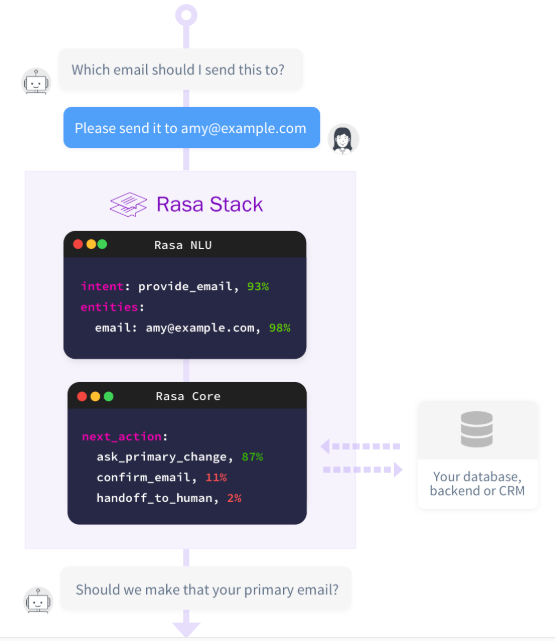

We use Rasa NLU to teach the bot to understand user input. NLU is the part responsible for teaching the bot how to interpret what the user says. Below is a sample of the conversation we want the bot to support.

<source> https://cdn-images-1.medium.com/max/800/1*2ZmeQffwE9JUdSz-dlLKOg.png

To achieve this, we will build a Rasa NLU model and feed it training data. The model then converts incoming text into a structured format consisting of entities and intents.

1) Preparing the NLU Training Data

The training data consists of a list of messages that the bot is expected to receive from users. The data is annotated with the intents and entities that Rasa NLU should learn to extract.

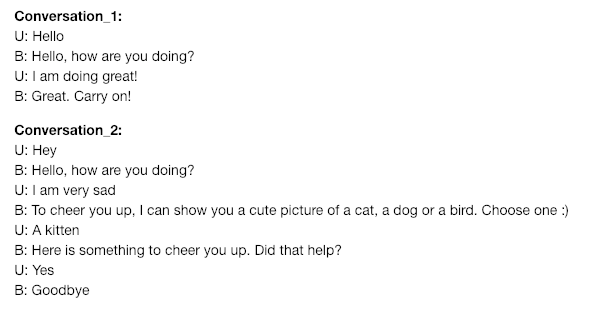

Let's look at an example to understand the concepts of intent and entity.

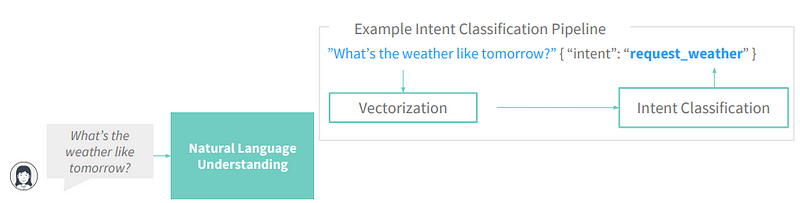

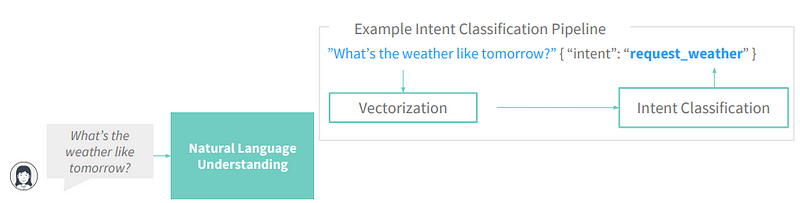

Intent: An intent describes what a message is about. For example, for a weather forecast bot, the message "What's the weather like tomorrow?" has the intent request_weather.

<source> https://cdn-images-1.medium.com/max/800/1*Vxp3-_kh6jS5r5DuxsXLPw.png

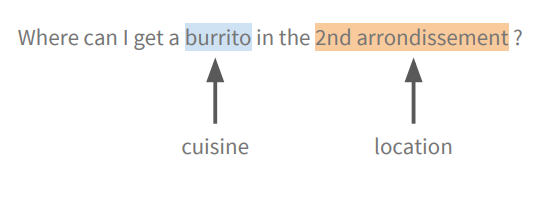

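Entity: Entities are pieces of information, recognized as structured data within the sentence, that help the chatbot understand what specifically the user is asking for. In the example below, cuisine and location are both extracted entities.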

<source> https://cdn-images-1.medium.com/max/800/1*84kkw5ddpVzA1IU8e5Sm4w.png
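In code, an intent and its entities end up as a small structured record. The sketch below builds one by hand to show the shape: the field names `start`, `end`, `value`, and `entity` follow the parse output shown later in this post, while the `annotate` helper, the sentence, and the intent name `restaurant_search` are hypothetical and chosen only to illustrate cuisine and location.

```python
def annotate(text, intent, entity_values):
    """Build a parse-result-like dict.

    entity_values maps an entity name to the substring of text it covers.
    """
    entities = []
    for entity, value in entity_values.items():
        start = text.find(value)
        if start == -1:
            raise ValueError(f"{value!r} does not occur in the text")
        entities.append({
            "start": start,              # character offset where the entity begins
            "end": start + len(value),   # offset one past its last character
            "value": value,
            "entity": entity,
        })
    return {"text": text, "intent": {"name": intent}, "entities": entities}


# Hypothetical sentence and intent name.
result = annotate(
    "I am looking for a Mexican restaurant in Berlin",
    intent="restaurant_search",
    entity_values={"cuisine": "Mexican", "location": "Berlin"},
)
for entity in result["entities"]:
    print(entity)
```

The offsets make it possible to recover each entity from the original text with a plain slice, `text[start:end]`.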

Now let's prepare the NLU training data. It is saved as a file named nlu.md in the same location as the notebook. Training data is usually stored in a Markdown file.

nlu_md = """

## intent:greet

- hey

- hello there

- hi

- hello there

- good morning

- good evening

- moin

- hey there

- let's go

- hey dude

- goodmorning

- goodevening

- good afternoon

## intent:goodbye

- cu

- good by

- cee you later

- good night

- good afternoon

- bye

- goodbye

- have a nice day

- see you around

- bye bye

- see you later

## intent:mood_affirm

- yes

- indeed

- of course

- that sounds good

- correct

## intent:mood_deny

- no

- never

- I don't think so

- don't like that

- no way

- not really

## intent:mood_great

- perfect

- very good

- great

- amazing

- feeling like a king

- wonderful

- I am feeling very good

- I am great

- I am amazing

- I am going to save the world

- super

- extremely good

- so so perfect

- so good

- so perfect

## intent:mood_unhappy

- my day was horrible

- I am sad

- I don't feel very well

- I am disappointed

- super sad

- I'm so sad

- sad

- very sad

- unhappy

- bad

- very bad

- awful

- terrible

- not so good

- not very good

- extremly sad

- so saad

- Quite bad - can I get a cute picture of a [bird](group:birds), please?

- Really bad and only [doggo](group:shibes) pics and change that.

- Not good. The only thing that could make me fell better is a picture of a cute [kitten](group:cats).

- so sad. Only the picture of a [puppy](group:shibes) could make it better.

- I am very sad. I need a [cat](group:cats) picture.

- Extremely sad. Only the cute [doggo](group:shibes) pics can make me feel better.

- Bad. Please show me a [bird](group:birds) pic!

- Pretty bad to be honest. Can you show me a [puppy](group:shibes) picture to make me fell better?

## intent:inform

- A [dog](group:shibes)

- [dog](group:shibes)

- [bird](group:birds)

- a [cat](group:cats)

- [cat](group:cats)

- a [bird](group:birds)

- of a [dog](group:shibes)

- of a [cat](group:cats)

- a [bird](group:birds), please

- a [dog](group:shibes), please

"""

%store nlu_md > nlu.md

Writing 'nlu_md' (str) to file 'nlu.md'.
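To read the annotation syntax: each `## intent:<name>` header starts a group of examples, and `[cat](group:cats)` marks the word "cat" as a `group` entity whose normalized value is `cats`. The sketch below is a small stand-alone reader for this format, written only to make the structure visible. It is not Rasa's own parser, and `summarize` and the two regular expressions are hypothetical.

```python
import re
from collections import defaultdict

INTENT_HEADER = re.compile(r"^##\s*intent:\s*(\S+)\s*$")
# [surface text](entity) or [surface text](entity:value)
ENTITY = re.compile(r"\[([^\]]+)\]\(([^:)]+)(?::([^)]+))?\)")


def summarize(markdown_text):
    """Return ({intent: [plain example, ...]}, [(surface, entity, value), ...])."""
    examples = defaultdict(list)
    entities = []
    current_intent = None
    for line in markdown_text.splitlines():
        line = line.strip()
        header = INTENT_HEADER.match(line)
        if header:
            current_intent = header.group(1)
        elif line.startswith("- ") and current_intent:
            raw = line[2:]
            for surface, entity, value in ENTITY.findall(raw):
                # Without an explicit value, the surface text is the value.
                entities.append((surface, entity, value or surface))
            # Replace each annotation with its surface text.
            examples[current_intent].append(ENTITY.sub(r"\1", raw))
    return dict(examples), entities


sample = """
## intent:greet
- hey
- hello there

## intent:mood_unhappy
- I am very sad. I need a [cat](group:cats) picture.
"""
examples, entities = summarize(sample)
print(examples)
print(entities)
```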

2) Defining the NLU Model Configuration

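Rasa NLU is made up of several different components. Once the training data is ready, we can feed it to the NLU model pipeline. All the components listed in the pipeline are trained one after another, in order. More details about pipelines can be found in the official documentation.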

config = """

language: "en"

pipeline:

- name: "nlp_spacy" # loads the spacy language model

- name: "tokenizer_spacy" # splits the sentence into tokens

- name: "ner_crf" # trains a conditional random field (CRF) to extract entities

- name: "intent_featurizer_spacy" # transform the sentence into a vector representation

- name: "intent_classifier_sklearn" # uses the vector representation to classify using SVM

- name: "ner_synonyms" # trains the synonyms

"""

%store config > config.yml

Writing 'config' (str) to file 'config.yml'.

This file contains the configuration used by the NLU model. The configuration file matters for training because it supplies several important parameters used while the model is trained.
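The order of the pipeline entries matters because each component builds on what the earlier ones produced. The toy sketch below illustrates that idea with three hand-written stages that share one message dictionary. These are not Rasa's component classes; every function name, the vocabulary, and the label list are hypothetical.

```python
# Toy stand-ins for pipeline stages; these are NOT Rasa's component classes.

def tokenize(message):
    """Split the text into lowercase tokens."""
    message["tokens"] = message["text"].lower().split()


def featurize(message):
    """Count vocabulary words; depends on the tokens from the previous stage."""
    vocabulary = ["sad", "great", "hello"]
    message["features"] = [message["tokens"].count(word) for word in vocabulary]


def classify(message):
    """Pick the label with the highest count; depends on the features."""
    labels = ["mood_unhappy", "mood_great", "greet"]
    best = max(range(len(labels)), key=lambda i: message["features"][i])
    message["intent"] = labels[best]


def run_pipeline(text, stages):
    """Pass one message through every stage, in the order given."""
    message = {"text": text}
    for stage in stages:
        stage(message)
    return message


result = run_pipeline("I am sad", [tokenize, featurize, classify])
print(result["intent"])
```

Running the stages in a different order, for example `featurize` before `tokenize`, fails with a `KeyError` because the tokens do not exist yet. The same dependency is why the spaCy model is listed before the tokenizer and the featurizer before the classifier in the configuration above.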

3) Training the NLU Model.

Let's train the model so that it can understand user input. For example, when we send the bot a message such as "hello", it should recognize it as having the greet intent.

from rasa_nlu.training_data import load_data

from rasa_nlu.config import RasaNLUModelConfig

from rasa_nlu.model import Trainer

from rasa_nlu import config

# loading the nlu training samples

training_data = load_data("nlu.md")

# trainer to educate our pipeline

trainer = Trainer(config.load("config.yml"))

# train the model!

interpreter = trainer.train(training_data)

# store it for future use

model_directory = trainer.persist("./models/nlu", fixed_model_name="current")

INFO:rasa_nlu.training_data.loading:Training data format of nlu.md is md

INFO:rasa_nlu.training_data.training_data:Training data stats:

- intent examples: 85 (7 distinct intents)

- Found intents: 'greet', 'mood_unhappy', 'mood_affirm', 'mood_great', 'mood_deny', 'inform', 'goodbye'

- entity examples: 18 (1 distinct entities)

- found entities: 'group'

INFO:rasa_nlu.utils.spacy_utils:Trying to load spacy model with name 'en'

INFO:rasa_nlu.components:Added 'nlp_spacy' to component cache. Key 'nlp_spacy-en'.

INFO:rasa_nlu.model:Starting to train component nlp_spacy

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component tokenizer_spacy

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component ner_crf

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component intent_featurizer_spacy

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component intent_classifier_sklearn

[Parallel(n_jobs=1)]: Done 12 out of 12 | elapsed: 0.1s finished

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Starting to train component ner_synonyms

INFO:rasa_nlu.model:Finished training component.

INFO:rasa_nlu.model:Successfully saved model into '/home/founder/rasa/models/nlu/default/current'

Fitting 2 folds for each of 6 candidates, totalling 12 fits

The trained model is saved under './models/nlu/default/current', as the last INFO line of the log shows.
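The log shows an extra `default` folder between the path passed to `persist` and the model name. A minimal sketch of that layout follows, assuming it is always path, then project, then model name; `persisted_model_dir` is a hypothetical helper, and the `default` project name is inferred from the log rather than taken from the Rasa source.

```python
import os


def persisted_model_dir(path, fixed_model_name, project_name="default"):
    """Directory layout observed in the log: <path>/<project>/<model name>."""
    return os.path.abspath(os.path.join(path, project_name, fixed_model_name))


print(persisted_model_dir("./models/nlu", "current"))
```

In the notebook itself there is no need to rebuild the path by hand, since `trainer.persist` returns it and the code above keeps it in `model_directory`.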

4) Evaluating the NLU model

Now let's evaluate the model's performance. We start by passing it an arbitrary message.

import json

# A helper function for prettier output

def pprint(o):
    print(json.dumps(o, indent=2))

pprint(interpreter.parse("I am very sad. Could you send me a cat picture? "))

{

"intent": {

"name": "mood_unhappy",

"confidence": 0.6683543147712094

},

"entities": [

{

"start": 35,

"end": 38,

"value": "cats",

"entity": "group",

"confidence": 0.9107588112250706,

"extractor": "ner_crf",

"processors": [

"ner_synonyms"

]

}

],

"intent_ranking": [

{

"name": "mood_unhappy",

"confidence": 0.6683543147712094

},

{

"name": "goodbye",

"confidence": 0.0920061871439525

},

{

"name": "mood_great",

"confidence": 0.08791280857762719

},

{

"name": "greet",

"confidence": 0.057737993905256246

},

{

"name": "mood_affirm",

"confidence": 0.03508093992810576

},

{

"name": "inform",

"confidence": 0.03343134770242908

},

{

"name": "mood_deny",

"confidence": 0.025476407971419392

}

],

"text": "I am very sad. Could you send me a cat picture? "

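}

This works quite well. Now let's apply the model to a validation dataset. Here we use the nlu.md data, but in practice it is better to use data the model has not seen yet. Running the code below produces an intent confusion matrix along with various evaluation results.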

from rasa_nlu.evaluate import run_evaluation

run_evaluation("nlu.md", model_directory)

INFO:rasa_nlu.components:Added 'nlp_spacy' to component cache. Key 'nlp_spacy-en'.

INFO:rasa_nlu.training_data.loading:Training data format of nlu.md is md

INFO:rasa_nlu.training_data.training_data:Training data stats:

- intent examples: 85 (7 distinct intents)

- Found intents: 'greet', 'mood_unhappy', 'mood_affirm', 'mood_great', 'mood_deny', 'inform', 'goodbye'

- entity examples: 18 (1 distinct entities)

- found entities: 'group'

INFO:rasa_nlu.evaluate:Intent evaluation results:

INFO:rasa_nlu.evaluate:Intent Evaluation: Only considering those 85 examples that have a defined intent out of 85 examples

INFO:rasa_nlu.evaluate:F1-Score: 0.9881730469965763

INFO:rasa_nlu.evaluate:Precision: 0.9890756302521008

INFO:rasa_nlu.evaluate:Accuracy: 0.9882352941176471

INFO:rasa_nlu.evaluate:Classification report:

              precision    recall  f1-score   support

     goodbye       1.00      0.91      0.95        11
       greet       0.93      1.00      0.96        13
      inform       1.00      1.00      1.00        10
 mood_affirm       1.00      1.00      1.00         5
   mood_deny       1.00      1.00      1.00         6
  mood_great       1.00      1.00      1.00        15
mood_unhappy       1.00      1.00      1.00        25

 avg / total       0.99      0.99      0.99        85

INFO:rasa_nlu.evaluate:Confusion matrix, without normalization:

[[10 1 0 0 0 0 0]

[ 0 13 0 0 0 0 0]

[ 0 0 10 0 0 0 0]

[ 0 0 0 5 0 0 0]

[ 0 0 0 0 6 0 0]

[ 0 0 0 0 0 15 0]

[ 0 0 0 0 0 0 25]]

INFO:rasa_nlu.evaluate:Entity evaluation results:

INFO:rasa_nlu.evaluate:Evaluation for entity extractor: ner_crf

INFO:rasa_nlu.evaluate:F1-Score: 0.9775529042760154

INFO:rasa_nlu.evaluate:Precision: 0.9780517143345049

INFO:rasa_nlu.evaluate:Accuracy: 0.9787985865724381

INFO:rasa_nlu.evaluate:Classification report:

             precision    recall  f1-score   support

      group       0.93      0.72      0.81        18
  no_entity       0.98      1.00      0.99       265

avg / total       0.98      0.98      0.98       283
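To see where the intent figures come from, the per-intent precision and recall can be recomputed from the intent confusion matrix in the log. The sketch below assumes the scikit-learn convention (rows are true intents, columns are predicted intents) and that the rows follow the label order of the classification report. The function names are illustrative.

```python
# Intent labels in the order used by the classification report.
labels = ["goodbye", "greet", "inform", "mood_affirm",
          "mood_deny", "mood_great", "mood_unhappy"]

# Confusion matrix copied from the log: rows are true intents,
# columns are predicted intents (assumed scikit-learn convention).
matrix = [
    [10, 1, 0, 0, 0, 0, 0],
    [0, 13, 0, 0, 0, 0, 0],
    [0, 0, 10, 0, 0, 0, 0],
    [0, 0, 0, 5, 0, 0, 0],
    [0, 0, 0, 0, 6, 0, 0],
    [0, 0, 0, 0, 0, 15, 0],
    [0, 0, 0, 0, 0, 0, 25],
]


def per_class_scores(matrix, labels):
    """Return {label: (precision, recall)} computed from a confusion matrix."""
    scores = {}
    for i, label in enumerate(labels):
        true_positive = matrix[i][i]
        predicted_as_label = sum(row[i] for row in matrix)  # column sum
        actually_label = sum(matrix[i])                      # row sum
        precision = true_positive / predicted_as_label if predicted_as_label else 0.0
        recall = true_positive / actually_label if actually_label else 0.0
        scores[label] = (precision, recall)
    return scores


def accuracy(matrix):
    """Share of examples on the diagonal, i.e. classified correctly."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


scores = per_class_scores(matrix, labels)
for label, (precision, recall) in scores.items():
    print("{:<13} precision={:.2f} recall={:.2f}".format(label, precision, recall))
print("accuracy={:.4f}".format(accuracy(matrix)))
```

The only off-diagonal entry is one goodbye example predicted as greet, which is why goodbye loses recall and greet loses precision while every other intent scores 1.00.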

Teaching the bot to respond using Rasa Core

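The bot can now understand what the user is saying. The next step is to make the bot send a response. In our case, that means sending an image of a dog, a cat, or a bird, depending on the user's choice, to cheer the user up. Let's train a dialogue management model with Rasa Core so that the bot can respond.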

1) Writing Stories

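The training data for the dialogue management model is called stories. A story contains a piece of an actual conversation that could take place between a user and the bot. The user's input is expressed as intents and entities, and the chatbot's responses are expressed as actions. Let's look at what a typical story looks like.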

stories_md = """

## happy path <!-- name of the story - just for debugging -->

* greet

- utter_greet

* mood_great <!-- user utterance, in format intent[entities] -->

- utter_happy

* mood_affirm

- utter_happy

* mood_affirm

- utter_goodbye

## sad path 1 <!-- this is already the start of the next story -->

* greet

- utter_greet <!-- action the bot should execute -->

* mood_unhappy

- utter_ask_picture

* inform{"group":"dog"}

- action_retrieve_image

- utter_did_that_help

* mood_affirm

- utter_happy

## sad path 2

* greet

- utter_greet

* mood_unhappy

- utter_ask_picture

* inform{"group":"cat"}

- action_retrieve_image

- utter_did_that_help

* mood_deny

- utter_goodbye

## sad path 3

* greet

- utter_greet

* mood_unhappy{"group":"puppy"}

- action_retrieve_image

- utter_did_that_help

* mood_affirm

- utter_happy

## strange user

* mood_affirm

- utter_happy

* mood_affirm

- utter_unclear

## say goodbye

* goodbye

- utter_goodbye

## fallback

- utter_unclear

"""

%store stories_md > stories.md

Writing 'stories_md' (str) to file 'stories.md'

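A typical story is structured as follows.

- ## marks the start of a story

- * marks a message sent by the user, written as an intent with optional entities

- - marks an action taken by the bot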
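The three markers are simple enough to read with a few lines of code. The following is a minimal sketch of such a reader, not the parser Rasa Core uses; the function name `parse_stories` and the shortened sample are illustrative.

```python
import re


def parse_stories(text):
    """Parse story markdown into {story name: [(kind, value), ...]}."""
    stories = {}
    current = None
    for raw_line in text.splitlines():
        # Drop inline HTML comments such as <!-- ... --> and surrounding spaces.
        line = re.sub(r"<!--.*?-->", "", raw_line).strip()
        if not line:
            continue
        if line.startswith("## "):
            # A new story begins.
            current = line[3:].strip()
            stories[current] = []
        elif current is not None and line.startswith("* "):
            # A user message, written as intent plus optional entities.
            stories[current].append(("user", line[2:].strip()))
        elif current is not None and line.startswith("- "):
            # An action taken by the bot.
            stories[current].append(("bot", line[2:].strip()))
    return stories


sample = """
## say goodbye
* goodbye
  - utter_goodbye

## sad path 3 <!-- comments are ignored -->
* greet
  - utter_greet
* mood_unhappy{"group":"puppy"}
  - action_retrieve_image
"""

for name, steps in parse_stories(sample).items():
    print(name, steps)
```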

2) Defining a Domain

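The domain can be thought of as the universe in which the bot lives and operates. It covers which user inputs the bot should expect, which actions it can predict, how it responds, and what information it stores. The domain consists of five key parts: intents, entities, slots, actions, and templates. We have already covered the first two, so let's look at the rest.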

- slots: placeholders for values that let the bot keep track of the conversation

- actions: the things the bot can say or do

- templates: template strings for the things the bot can say
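The role of a slot can be pictured with a small sketch. It assumes, as a simplification, that an extracted entity fills the slot with the same name. The function `fill_slots` and the plain dictionaries are hypothetical stand-ins, not Rasa Core's tracker.

```python
def fill_slots(slots, entities):
    """Return a copy of slots updated from entities whose names match a slot."""
    updated = dict(slots)
    for entity in entities:
        if entity["entity"] in updated:
            updated[entity["entity"]] = entity["value"]
    return updated


# Slot as declared in the domain, initially empty.
slots = {"group": None}

# Entity in the shape produced by the NLU parse result.
entities = [{"entity": "group", "value": "cats"}]

slots = fill_slots(slots, entities)
print(slots)
```

Once the slot holds a value, later steps in the conversation can read it without asking the user again.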

The domain is defined in a domain.yml file. Take a look at the following.

domain_yml = """
intents:
  - greet
  - goodbye
  - mood_affirm
  - mood_deny
  - mood_great
  - mood_unhappy
  - inform

slots:
  group:
    type: text

entities:
  - group

actions:
  - utter_greet
  - utter_did_that_help
  - utter_happy
  - utter_goodbye
  - utter_unclear
  - utter_ask_picture
  - __main__.ApiAction

templates:
  utter_greet:
  - text: "Hey! How are you?"

  utter_did_that_help:
  - text: "Did that help you?"

  utter_unclear:
  - text: "I am not sure what you are aiming for."

  utter_happy:
  - text: "Great carry on!"

  utter_goodbye:
  - text: "Bye"

  utter_ask_picture:
  - text: "To cheer you up, I can show you a cute picture of a dog, cat or a bird. Which one do you choose?"
"""

%store domain_yml > domain.yml

Writing 'domain_yml' (str) to file 'domain.yml'.

3) Custom Actions

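Because the bot has to make an API call to fetch a picture of a dog, a cat, or a bird depending on the user's choice, we need to create a custom action. The bot finds out which kind of picture to show by reading the value of the group slot.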

from rasa_core.actions import Action
from rasa_core.events import SlotSet
from IPython.core.display import Image, display
import requests


class ApiAction(Action):
    def name(self):
        # Name used to reference this action in the stories
        return "action_retrieve_image"

    def run(self, dispatcher, tracker, domain):
        # Read the animal group chosen by the user from the slot
        group = tracker.get_slot('group')

        # Request one image URL for that group
        r = requests.get('http://shibe.online/api/{}?count=1&urls=true&httpsUrls=true'.format(group))
        response = r.content.decode()

        # Strip the surrounding '["' and '"]' so that only the URL is left
        response = response.replace('["', "")
        response = response.replace('"]', "")

        #display(Image(response[0], height=550, width=520))
        dispatcher.utter_message("Here is something to cheer you up: {}".format(response))

        # This action adds no events to the tracker
        return []
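The action above passes the slot value straight into the URL and trims the response with string replacements. A more defensive variant could normalize the slot value and parse the body as JSON, as in the sketch below. The endpoint names shibes, cats, and birds are an assumption about the API, the mapping and both function names are hypothetical, and the example.com URL is a placeholder.

```python
import json

# Assumed mapping from user-facing words to API endpoint names.
ENDPOINTS = {
    "dog": "shibes", "dogs": "shibes", "puppy": "shibes", "shibes": "shibes",
    "cat": "cats", "cats": "cats",
    "bird": "birds", "birds": "birds",
}


def build_image_url(group):
    """Return the request URL for a group, or None if the group is unknown."""
    endpoint = ENDPOINTS.get((group or "").lower())
    if endpoint is None:
        return None
    return "http://shibe.online/api/{}?count=1&urls=true&httpsUrls=true".format(endpoint)


def first_image_url(body):
    """Extract the first URL from a JSON array body such as '["https://..."]'."""
    try:
        urls = json.loads(body)
    except ValueError:
        # The body was not JSON, for example a plain-text error message.
        return None
    if isinstance(urls, list) and urls and isinstance(urls[0], str):
        return urls[0]
    return None


print(build_image_url("dog"))
print(first_image_url('["https://example.com/shibe.jpg"]'))
print(first_image_url("Invalid endpoint."))
```

With helpers like these, the action could send a friendly message when either function returns None instead of forwarding an error string to the user.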

4) Visualising the Training Data

!sudo apt-get -qq install -y graphviz libgraphviz-dev pkg-config;

!brew install graphviz

!{python} -m pip install pygraphviz;

Selecting previously unselected package libxdot4.

(Reading database ... 196425 files and directories currently installed.)

Preparing to unpack .../libxdot4_2.40.1-2_amd64.deb ...

Unpacking libxdot4 (2.40.1-2) ...

Selecting previously unselected package libgvc6-plugins-gtk.

Preparing to unpack .../libgvc6-plugins-gtk_2.40.1-2_amd64.deb ...

Unpacking libgvc6-plugins-gtk (2.40.1-2) ...

Selecting previously unselected package libgraphviz-dev.

Preparing to unpack .../libgraphviz-dev_2.40.1-2_amd64.deb ...

Unpacking libgraphviz-dev (2.40.1-2) ...

Setting up libxdot4 (2.40.1-2) ...

Setting up libgvc6-plugins-gtk (2.40.1-2) ...

Setting up libgraphviz-dev (2.40.1-2) ...

Processing triggers for libc-bin (2.27-3ubuntu1) ...

Processing triggers for man-db (2.8.3-2) ...

/bin/sh: 1: breq: not found

Collecting pygraphviz

Using cached https://files.pythonhosted.org/packages/7e/b1/d6d849ddaf6f11036f9980d433f383d4c13d1ebcfc3cd09bc845bda7e433/pygraphviz-1.5.zip

Building wheels for collected packages: pygraphviz

Running setup.py bdist_wheel for pygraphviz ... done

Stored in directory: /home/founder/.cache/pip/wheels/65/54/69/1aee9e66ab19916293208d4c9de0d3898adebe6b2eeff6476b

Successfully built pygraphviz

Installing collected packages: pygraphviz

Successfully installed pygraphviz-1.5

from IPython.display import Image

from rasa_core.agent import Agent

agent = Agent('domain.yml')

agent.visualize("stories.md", "story_graph.png", max_history=2)

Image(filename="story_graph.png")

INFO:apscheduler.scheduler:Scheduler started

Processed Story Blocks: 100%|██████████| 7/7 [00:00<00:00, 657.90it/s, # trackers=1]

5) Training a Dialogue Model

Finally, let's train the dialogue management model, which includes the policies. In this tutorial the model is implemented in Keras. The main component of the model is a recurrent neural network (an LSTM).

from rasa_core.policies import FallbackPolicy, KerasPolicy, MemoizationPolicy

from rasa_core.agent import Agent

# this will catch predictions the model isn't very certain about

# there is a threshold for the NLU predictions as well as the action predictions

fallback = FallbackPolicy(fallback_action_name="utter_unclear",
                          core_threshold=0.2,
                          nlu_threshold=0.1)

agent = Agent('domain.yml', policies=[MemoizationPolicy(), KerasPolicy(), fallback])

# loading our neatly defined training dialogues

training_data = agent.load_data('stories.md')

agent.train(
    training_data,
    validation_split=0.0,
    epochs=200
)

agent.persist('models/dialogue')

Using TensorFlow backend.

Processed Story Blocks: 100%|██████████| 7/7 [00:00<00:00, 597.92it/s, # trackers=1]

Processed Story Blocks: 100%|██████████| 7/7 [00:00<00:00, 364.70it/s, # trackers=7]

Processed Story Blocks: 100%|██████████| 7/7 [00:00<00:00, 257.86it/s, # trackers=13]

Processed Story Blocks: 100%|██████████| 7/7 [00:00<00:00, 251.92it/s, # trackers=10]

INFO:rasa_core.featurizers:Creating states and action examples from collected trackers (by MaxHistoryTrackerFeaturizer)...

Processed trackers: 100%|██████████| 206/206 [00:03<00:00, 60.83it/s, # actions=248]

INFO:rasa_core.featurizers:Created 248 action examples.

Processed actions: 248it [00:00, 759.24it/s, # examples=248]

INFO:rasa_core.policies.memoization:Memorized 248 unique action examples.

INFO:rasa_core.featurizers:Creating states and action examples from collected trackers (by MaxHistoryTrackerFeaturizer)...

Processed trackers: 100%|██████████| 206/206 [00:02<00:00, 61.71it/s, # actions=248]

INFO:rasa_core.featurizers:Created 248 action examples.

INFO:rasa_core.policies.keras_policy:Fitting model with 248 total samples and a validation split of 0.0

We also need a fallback response such as “Sorry, I didn’t understand that”. To get one, we add a FallbackPolicy to the policy ensemble.
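The effect of the two thresholds can be illustrated with a simplified decision function. This is only a sketch of the idea and does not reproduce how Rasa Core combines its policies. The function name `choose_action` and the action scores are made up, while the threshold values and the fallback action name are the ones passed to FallbackPolicy above.

```python
def choose_action(nlu_confidence, action_scores,
                  nlu_threshold=0.1, core_threshold=0.2,
                  fallback_action="utter_unclear"):
    """Pick the next action, falling back when confidence is too low.

    Simplified illustration only; not Rasa Core's policy ensemble.
    """
    # The message itself was not understood well enough.
    if nlu_confidence < nlu_threshold:
        return fallback_action
    # No candidate action is convincing enough.
    best_action = max(action_scores, key=action_scores.get)
    if action_scores[best_action] < core_threshold:
        return fallback_action
    return best_action


print(choose_action(0.67, {"utter_ask_picture": 0.9, "utter_happy": 0.05}))
print(choose_action(0.05, {"utter_ask_picture": 0.9, "utter_happy": 0.05}))
print(choose_action(0.67, {"utter_ask_picture": 0.15, "utter_happy": 0.1}))
```

Only the first call returns a regular action. The second falls back because the intent confidence is below 0.1, and the third because no action reaches 0.2.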

The Keras policy model that gets trained looks as follows.

_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
masking_1 (Masking)          (None, 5, 18)             0
_________________________________________________________________
lstm_1 (LSTM)                (None, 32)                6528
_________________________________________________________________
dense_1 (Dense)              (None, 9)                 297
_________________________________________________________________
activation_1 (Activation)    (None, 9)                 0
=================================================================
Total params: 6,825
Trainable params: 6,825
Non-trainable params: 0
_________________________________________________________________
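The parameter counts in the summary can be checked by hand. The sketch below uses the standard formulas for an LSTM layer and a dense layer, with the sizes read from the output shapes: 18 input features per time step, 32 LSTM units, and 9 outputs. Reading the 5 as the number of past turns and the 9 as the number of possible actions is an interpretation, not something the summary states.

```python
def lstm_params(input_dim, units):
    # Four gates, each with input weights, recurrent weights and a bias.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim, units):
    # One weight per input-output pair plus one bias per output.
    return input_dim * units + units


lstm = lstm_params(input_dim=18, units=32)
dense = dense_params(input_dim=32, units=9)
print(lstm, dense, lstm + dense)  # 6528 297 6825
```

The masking and activation layers have no weights, so the total of 6,825 comes entirely from the LSTM and dense layers.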

The model is saved to the ‘models/dialogue’ path.

Epoch 1/200

248/248 [==============================] - 1s 3ms/step - loss: 2.1964 - acc: 0.1573

Epoch 2/200

248/248 [==============================] - 0s 191us/step - loss: 2.1178 - acc: 0.3992

Epoch 3/200

248/248 [==============================] - 0s 179us/step - loss: 2.0545 - acc: 0.4677

Epoch 4/200

248/248 [==============================] - 0s 184us/step - loss: 1.9949 - acc: 0.4718

Epoch 5/200

248/248 [==============================] - 0s 211us/step - loss: 1.9206 - acc: 0.4718

Epoch 6/200

248/248 [==============================] - 0s 199us/step - loss: 1.8613 - acc: 0.4677

Epoch 7/200

248/248 [==============================] - 0s 204us/step - loss: 1.7997 - acc: 0.4677

Epoch 8/200

248/248 [==============================] - 0s 193us/step - loss: 1.7418 - acc: 0.4677

Epoch 9/200

248/248 [==============================] - 0s 179us/step - loss: 1.7043 - acc: 0.4677

Epoch 10/200

248/248 [==============================] - 0s 182us/step - loss: 1.6838 - acc: 0.4677

Epoch 11/200

248/248 [==============================] - 0s 182us/step - loss: 1.6445 - acc: 0.4677

Epoch 12/200

248/248 [==============================] - 0s 184us/step - loss: 1.6238 - acc: 0.4677

Epoch 13/200

248/248 [==============================] - 0s 203us/step - loss: 1.5891 - acc: 0.4677

Epoch 14/200

248/248 [==============================] - 0s 179us/step - loss: 1.5670 - acc: 0.4677

Epoch 15/200

248/248 [==============================] - 0s 194us/step - loss: 1.5419 - acc: 0.4677

Epoch 16/200

248/248 [==============================] - 0s 207us/step - loss: 1.5013 - acc: 0.4677

Epoch 17/200

248/248 [==============================] - 0s 194us/step - loss: 1.4888 - acc: 0.4677

Epoch 18/200

248/248 [==============================] - 0s 197us/step - loss: 1.4496 - acc: 0.4677

Epoch 19/200

248/248 [==============================] - 0s 195us/step - loss: 1.4264 - acc: 0.4677

Epoch 20/200

248/248 [==============================] - 0s 179us/step - loss: 1.3835 - acc: 0.4798

Epoch 21/200

248/248 [==============================] - 0s 193us/step - loss: 1.3665 - acc: 0.4677

Epoch 22/200

248/248 [==============================] - 0s 219us/step - loss: 1.3229 - acc: 0.4758

Epoch 23/200

248/248 [==============================] - 0s 184us/step - loss: 1.2707 - acc: 0.5363

Epoch 24/200

248/248 [==============================] - 0s 186us/step - loss: 1.2414 - acc: 0.5161

Epoch 25/200

248/248 [==============================] - 0s 213us/step - loss: 1.1796 - acc: 0.5565

Epoch 26/200

248/248 [==============================] - 0s 191us/step - loss: 1.1602 - acc: 0.5766

Epoch 27/200

248/248 [==============================] - 0s 190us/step - loss: 1.0962 - acc: 0.6411

Epoch 28/200

248/248 [==============================] - 0s 189us/step - loss: 1.0891 - acc: 0.6250

Epoch 29/200

248/248 [==============================] - 0s 179us/step - loss: 1.0696 - acc: 0.6411

Epoch 30/200

248/248 [==============================] - 0s 186us/step - loss: 0.9878 - acc: 0.6774

Epoch 31/200

248/248 [==============================] - 0s 183us/step - loss: 0.9294 - acc: 0.6855

Epoch 32/200

248/248 [==============================] - 0s 188us/step - loss: 0.8899 - acc: 0.7137

Epoch 33/200

248/248 [==============================] - 0s 191us/step - loss: 0.8980 - acc: 0.6774

Epoch 34/200

248/248 [==============================] - 0s 179us/step - loss: 0.8649 - acc: 0.7258

Epoch 35/200

248/248 [==============================] - 0s 186us/step - loss: 0.8284 - acc: 0.7097

Epoch 36/200

248/248 [==============================] - 0s 182us/step - loss: 0.8008 - acc: 0.7460

Epoch 37/200

248/248 [==============================] - 0s 182us/step - loss: 0.7445 - acc: 0.7581

Epoch 38/200

248/248 [==============================] - 0s 191us/step - loss: 0.7340 - acc: 0.7661

Epoch 39/200

248/248 [==============================] - 0s 188us/step - loss: 0.7528 - acc: 0.7500

Epoch 40/200

248/248 [==============================] - 0s 190us/step - loss: 0.7061 - acc: 0.7581

Epoch 41/200

248/248 [==============================] - 0s 193us/step - loss: 0.6745 - acc: 0.7984

Epoch 42/200

248/248 [==============================] - 0s 182us/step - loss: 0.6599 - acc: 0.8065

Epoch 43/200

248/248 [==============================] - 0s 181us/step - loss: 0.6584 - acc: 0.7984

Epoch 44/200

248/248 [==============================] - 0s 186us/step - loss: 0.6315 - acc: 0.8306

Epoch 45/200

248/248 [==============================] - 0s 186us/step - loss: 0.6455 - acc: 0.7782

Epoch 46/200

248/248 [==============================] - 0s 239us/step - loss: 0.6177 - acc: 0.8387

Epoch 47/200

248/248 [==============================] - 0s 197us/step - loss: 0.6025 - acc: 0.8387

Epoch 48/200

248/248 [==============================] - 0s 246us/step - loss: 0.5874 - acc: 0.8629

Epoch 49/200

248/248 [==============================] - 0s 190us/step - loss: 0.5522 - acc: 0.8871

Epoch 50/200

248/248 [==============================] - 0s 192us/step - loss: 0.5401 - acc: 0.8710

Epoch 51/200

248/248 [==============================] - 0s 178us/step - loss: 0.5599 - acc: 0.8548

Epoch 52/200

248/248 [==============================] - 0s 183us/step - loss: 0.4985 - acc: 0.8911

Epoch 53/200

248/248 [==============================] - 0s 194us/step - loss: 0.5098 - acc: 0.8790

Epoch 54/200

248/248 [==============================] - 0s 183us/step - loss: 0.5042 - acc: 0.8911

Epoch 55/200

248/248 [==============================] - 0s 182us/step - loss: 0.4659 - acc: 0.8911

Epoch 56/200

248/248 [==============================] - 0s 182us/step - loss: 0.4699 - acc: 0.8831

Epoch 57/200

248/248 [==============================] - 0s 190us/step - loss: 0.4587 - acc: 0.9153

Epoch 58/200

248/248 [==============================] - 0s 183us/step - loss: 0.4658 - acc: 0.9032

Epoch 59/200

248/248 [==============================] - 0s 187us/step - loss: 0.4079 - acc: 0.9234

Epoch 60/200

248/248 [==============================] - 0s 193us/step - loss: 0.4340 - acc: 0.9113

Epoch 61/200

248/248 [==============================] - 0s 195us/step - loss: 0.4236 - acc: 0.9113

Epoch 62/200

248/248 [==============================] - 0s 200us/step - loss: 0.4023 - acc: 0.9194

Epoch 63/200

248/248 [==============================] - 0s 223us/step - loss: 0.3834 - acc: 0.9315

Epoch 64/200

248/248 [==============================] - 0s 181us/step - loss: 0.3777 - acc: 0.9355

Epoch 65/200

248/248 [==============================] - 0s 190us/step - loss: 0.3531 - acc: 0.9435

Epoch 66/200

248/248 [==============================] - 0s 232us/step - loss: 0.3770 - acc: 0.9395

Epoch 67/200

248/248 [==============================] - 0s 200us/step - loss: 0.3417 - acc: 0.9355

Epoch 68/200

248/248 [==============================] - 0s 190us/step - loss: 0.3284 - acc: 0.9516

Epoch 69/200

248/248 [==============================] - 0s 184us/step - loss: 0.3285 - acc: 0.9476

Epoch 70/200

248/248 [==============================] - 0s 192us/step - loss: 0.3546 - acc: 0.9194

Epoch 71/200

248/248 [==============================] - 0s 185us/step - loss: 0.3384 - acc: 0.9274

Epoch 72/200

248/248 [==============================] - 0s 181us/step - loss: 0.2976 - acc: 0.9556

Epoch 73/200

248/248 [==============================] - 0s 197us/step - loss: 0.3297 - acc: 0.9315

Epoch 74/200

248/248 [==============================] - 0s 187us/step - loss: 0.3007 - acc: 0.9435

Epoch 75/200

248/248 [==============================] - 0s 189us/step - loss: 0.3187 - acc: 0.9194

Epoch 76/200

248/248 [==============================] - 0s 192us/step - loss: 0.2989 - acc: 0.9395

Epoch 77/200

248/248 [==============================] - 0s 181us/step - loss: 0.3354 - acc: 0.9153

Epoch 78/200

248/248 [==============================] - 0s 173us/step - loss: 0.2916 - acc: 0.9435

Epoch 79/200

248/248 [==============================] - 0s 191us/step - loss: 0.2907 - acc: 0.9315

Epoch 80/200

248/248 [==============================] - 0s 220us/step - loss: 0.2697 - acc: 0.9355

Epoch 81/200

248/248 [==============================] - 0s 182us/step - loss: 0.2429 - acc: 0.9637

Epoch 82/200

248/248 [==============================] - 0s 198us/step - loss: 0.2485 - acc: 0.9556

Epoch 83/200

248/248 [==============================] - 0s 196us/step - loss: 0.2425 - acc: 0.9516

Epoch 84/200

248/248 [==============================] - 0s 197us/step - loss: 0.2533 - acc: 0.9476

Epoch 85/200

248/248 [==============================] - 0s 193us/step - loss: 0.2639 - acc: 0.9355

Epoch 86/200

248/248 [==============================] - 0s 201us/step - loss: 0.2543 - acc: 0.9355

Epoch 87/200

248/248 [==============================] - 0s 213us/step - loss: 0.2274 - acc: 0.9476

Epoch 88/200

248/248 [==============================] - 0s 190us/step - loss: 0.1907 - acc: 0.9758

Epoch 89/200

248/248 [==============================] - 0s 199us/step - loss: 0.2290 - acc: 0.9435

Epoch 90/200

248/248 [==============================] - 0s 220us/step - loss: 0.2408 - acc: 0.9597

Epoch 91/200

248/248 [==============================] - 0s 185us/step - loss: 0.1973 - acc: 0.9637

Epoch 92/200

248/248 [==============================] - 0s 199us/step - loss: 0.1618 - acc: 0.9879

Epoch 93/200

248/248 [==============================] - 0s 196us/step - loss: 0.2006 - acc: 0.9556

Epoch 94/200

248/248 [==============================] - 0s 197us/step - loss: 0.2229 - acc: 0.9435

Epoch 95/200

248/248 [==============================] - 0s 188us/step - loss: 0.1920 - acc: 0.9597

Epoch 96/200

248/248 [==============================] - 0s 197us/step - loss: 0.1915 - acc: 0.9556

Epoch 97/200

248/248 [==============================] - 0s 191us/step - loss: 0.1850 - acc: 0.9556

Epoch 98/200

248/248 [==============================] - 0s 198us/step - loss: 0.1952 - acc: 0.9637

Epoch 99/200

248/248 [==============================] - 0s 197us/step - loss: 0.1769 - acc: 0.9476

Epoch 100/200

248/248 [==============================] - 0s 185us/step - loss: 0.1715 - acc: 0.9637

Epoch 101/200

248/248 [==============================] - 0s 195us/step - loss: 0.1794 - acc: 0.9677

Epoch 102/200

248/248 [==============================] - 0s 184us/step - loss: 0.1975 - acc: 0.9556

Epoch 103/200

248/248 [==============================] - 0s 242us/step - loss: 0.1823 - acc: 0.9597

Epoch 104/200

248/248 [==============================] - 0s 194us/step - loss: 0.1587 - acc: 0.9677

Epoch 105/200

248/248 [==============================] - 0s 186us/step - loss: 0.1517 - acc: 0.9758

Epoch 106/200

248/248 [==============================] - 0s 199us/step - loss: 0.1768 - acc: 0.9476

Epoch 107/200

248/248 [==============================] - 0s 210us/step - loss: 0.1812 - acc: 0.9476

Epoch 108/200

248/248 [==============================] - 0s 173us/step - loss: 0.1626 - acc: 0.9597

Epoch 109/200

248/248 [==============================] - 0s 195us/step - loss: 0.1519 - acc: 0.9758

Epoch 110/200

248/248 [==============================] - 0s 202us/step - loss: 0.1378 - acc: 0.9677

Epoch 111/200

248/248 [==============================] - 0s 187us/step - loss: 0.1638 - acc: 0.9556

Epoch 112/200

248/248 [==============================] - 0s 210us/step - loss: 0.1680 - acc: 0.9597

Epoch 113/200

248/248 [==============================] - 0s 198us/step - loss: 0.1362 - acc: 0.9677

Epoch 114/200

248/248 [==============================] - 0s 201us/step - loss: 0.1515 - acc: 0.9556

Epoch 115/200

248/248 [==============================] - 0s 189us/step - loss: 0.1467 - acc: 0.9597

Epoch 116/200

248/248 [==============================] - 0s 196us/step - loss: 0.1282 - acc: 0.9758

Epoch 117/200

248/248 [==============================] - 0s 199us/step - loss: 0.1530 - acc: 0.9556

Epoch 118/200

248/248 [==============================] - 0s 188us/step - loss: 0.1533 - acc: 0.9677

Epoch 119/200

248/248 [==============================] - 0s 192us/step - loss: 0.1202 - acc: 0.9677

Epoch 120/200

248/248 [==============================] - 0s 253us/step - loss: 0.1785 - acc: 0.9516

Epoch 121/200

248/248 [==============================] - 0s 192us/step - loss: 0.1232 - acc: 0.9798

Epoch 122/200

248/248 [==============================] - 0s 186us/step - loss: 0.1221 - acc: 0.9839

Epoch 123/200

248/248 [==============================] - 0s 186us/step - loss: 0.1598 - acc: 0.9516

Epoch 124/200

248/248 [==============================] - 0s 193us/step - loss: 0.1398 - acc: 0.9637

Epoch 125/200

248/248 [==============================] - 0s 201us/step - loss: 0.1502 - acc: 0.9556

Epoch 126/200

248/248 [==============================] - 0s 209us/step - loss: 0.1334 - acc: 0.9839

Epoch 127/200

248/248 [==============================] - 0s 204us/step - loss: 0.1354 - acc: 0.9556

Epoch 128/200

248/248 [==============================] - 0s 197us/step - loss: 0.1261 - acc: 0.9758

Epoch 129/200

248/248 [==============================] - 0s 201us/step - loss: 0.1005 - acc: 0.9798

Epoch 130/200

248/248 [==============================] - 0s 193us/step - loss: 0.0984 - acc: 0.9839

Epoch 131/200

248/248 [==============================] - 0s 190us/step - loss: 0.1070 - acc: 0.9758

Epoch 132/200

248/248 [==============================] - 0s 233us/step - loss: 0.1385 - acc: 0.9718

Epoch 133/200

248/248 [==============================] - 0s 197us/step - loss: 0.0894 - acc: 0.9879

Epoch 134/200

248/248 [==============================] - 0s 205us/step - loss: 0.0867 - acc: 0.9919

Epoch 135/200

248/248 [==============================] - 0s 187us/step - loss: 0.1365 - acc: 0.9556

Epoch 136/200

248/248 [==============================] - 0s 193us/step - loss: 0.1151 - acc: 0.9597

Epoch 137/200

248/248 [==============================] - 0s 199us/step - loss: 0.0994 - acc: 0.9879

Epoch 138/200

248/248 [==============================] - 0s 185us/step - loss: 0.1113 - acc: 0.9677

Epoch 139/200

248/248 [==============================] - 0s 194us/step - loss: 0.0904 - acc: 0.9879

Epoch 140/200

248/248 [==============================] - 0s 189us/step - loss: 0.0883 - acc: 0.9839

Epoch 141/200

248/248 [==============================] - 0s 187us/step - loss: 0.0936 - acc: 0.9839

Epoch 142/200

248/248 [==============================] - 0s 180us/step - loss: 0.1322 - acc: 0.9597

Epoch 143/200

248/248 [==============================] - 0s 221us/step - loss: 0.0907 - acc: 0.9839

Epoch 144/200

248/248 [==============================] - 0s 198us/step - loss: 0.1022 - acc: 0.9758

Epoch 145/200

248/248 [==============================] - 0s 209us/step - loss: 0.0993 - acc: 0.9758

Epoch 146/200

248/248 [==============================] - 0s 196us/step - loss: 0.0886 - acc: 0.9758

Epoch 147/200

248/248 [==============================] - 0s 214us/step - loss: 0.0925 - acc: 0.9919

Epoch 148/200

248/248 [==============================] - 0s 194us/step - loss: 0.0955 - acc: 0.9718

Epoch 149/200

248/248 [==============================] - 0s 186us/step - loss: 0.0880 - acc: 0.9839

Epoch 150/200

248/248 [==============================] - 0s 188us/step - loss: 0.0973 - acc: 0.9758

Epoch 151/200

248/248 [==============================] - 0s 173us/step - loss: 0.0870 - acc: 0.9798

Epoch 152/200

248/248 [==============================] - 0s 170us/step - loss: 0.0849 - acc: 0.9919

Epoch 153/200

248/248 [==============================] - 0s 187us/step - loss: 0.0801 - acc: 0.9758

Epoch 154/200

248/248 [==============================] - 0s 182us/step - loss: 0.0866 - acc: 0.9798

Epoch 155/200

248/248 [==============================] - 0s 194us/step - loss: 0.1014 - acc: 0.9758

Epoch 156/200

248/248 [==============================] - 0s 204us/step - loss: 0.0687 - acc: 0.9839

Epoch 157/200

248/248 [==============================] - 0s 214us/step - loss: 0.1113 - acc: 0.9637

Epoch 158/200

248/248 [==============================] - 0s 208us/step - loss: 0.0777 - acc: 0.9718

Epoch 159/200

248/248 [==============================] - 0s 221us/step - loss: 0.0838 - acc: 0.9919

Epoch 160/200

248/248 [==============================] - 0s 187us/step - loss: 0.0893 - acc: 0.9798

Epoch 161/200

248/248 [==============================] - 0s 202us/step - loss: 0.0813 - acc: 0.9758

Epoch 162/200

248/248 [==============================] - 0s 201us/step - loss: 0.0812 - acc: 0.9879

Epoch 163/200

248/248 [==============================] - 0s 195us/step - loss: 0.0950 - acc: 0.9677

Epoch 164/200

248/248 [==============================] - 0s 198us/step - loss: 0.0636 - acc: 0.9960

Epoch 165/200

248/248 [==============================] - 0s 197us/step - loss: 0.0671 - acc: 0.9919

Epoch 166/200

248/248 [==============================] - 0s 196us/step - loss: 0.0726 - acc: 0.9879

Epoch 167/200

248/248 [==============================] - 0s 221us/step - loss: 0.0601 - acc: 0.9919

Epoch 168/200

248/248 [==============================] - 0s 196us/step - loss: 0.0594 - acc: 0.9919

Epoch 169/200

248/248 [==============================] - 0s 191us/step - loss: 0.0357 - acc: 0.9960

Epoch 170/200

248/248 [==============================] - 0s 185us/step - loss: 0.0671 - acc: 0.9879

Epoch 171/200

248/248 [==============================] - 0s 193us/step - loss: 0.0724 - acc: 0.9879

Epoch 172/200

248/248 [==============================] - 0s 189us/step - loss: 0.0749 - acc: 0.9758

Epoch 173/200

248/248 [==============================] - 0s 174us/step - loss: 0.1039 - acc: 0.9758

Epoch 174/200

248/248 [==============================] - 0s 183us/step - loss: 0.0533 - acc: 0.9919

Epoch 175/200

248/248 [==============================] - 0s 198us/step - loss: 0.0779 - acc: 0.9839

Epoch 176/200

248/248 [==============================] - 0s 188us/step - loss: 0.0625 - acc: 0.9798

Epoch 177/200

248/248 [==============================] - 0s 196us/step - loss: 0.0596 - acc: 0.9919

Epoch 178/200

248/248 [==============================] - 0s 199us/step - loss: 0.0544 - acc: 0.9960

Epoch 179/200

248/248 [==============================] - 0s 203us/step - loss: 0.0669 - acc: 0.9758

Epoch 180/200

248/248 [==============================] - 0s 193us/step - loss: 0.0570 - acc: 0.9919

Epoch 181/200

248/248 [==============================] - 0s 195us/step - loss: 0.0577 - acc: 0.9919

Epoch 182/200

248/248 [==============================] - 0s 189us/step - loss: 0.0572 - acc: 0.9798

Epoch 183/200

248/248 [==============================] - 0s 227us/step - loss: 0.0516 - acc: 0.9919

Epoch 184/200

248/248 [==============================] - 0s 183us/step - loss: 0.0727 - acc: 0.9839

Epoch 185/200

248/248 [==============================] - 0s 211us/step - loss: 0.0529 - acc: 0.9960

Epoch 186/200

248/248 [==============================] - 0s 201us/step - loss: 0.0665 - acc: 0.9798

Epoch 187/200

248/248 [==============================] - 0s 199us/step - loss: 0.0929 - acc: 0.9758

Epoch 188/200

248/248 [==============================] - 0s 212us/step - loss: 0.0659 - acc: 0.9919

Epoch 189/200

248/248 [==============================] - 0s 187us/step - loss: 0.0578 - acc: 0.9839

Epoch 190/200

248/248 [==============================] - 0s 190us/step - loss: 0.0577 - acc: 0.9879

Epoch 191/200

248/248 [==============================] - 0s 175us/step - loss: 0.0674 - acc: 0.9798

Epoch 192/200

248/248 [==============================] - 0s 203us/step - loss: 0.0654 - acc: 0.9839

Epoch 193/200

248/248 [==============================] - 0s 197us/step - loss: 0.0459 - acc: 0.9960

Epoch 194/200

248/248 [==============================] - 0s 186us/step - loss: 0.0693 - acc: 0.9798

Epoch 195/200

248/248 [==============================] - 0s 192us/step - loss: 0.0459 - acc: 0.9879

Epoch 196/200

248/248 [==============================] - 0s 195us/step - loss: 0.0617 - acc: 0.9839

Epoch 197/200

248/248 [==============================] - 0s 204us/step - loss: 0.0650 - acc: 0.9879

Epoch 198/200

248/248 [==============================] - 0s 198us/step - loss: 0.0723 - acc: 0.9798

Epoch 199/200

248/248 [==============================] - 0s 209us/step - loss: 0.0526 - acc: 0.9879

Epoch 200/200

248/248 [==============================] - 0s 189us/step - loss: 0.0593 - acc: 0.9919

INFO:rasa_core.policies.keras_policy:Done fitting keras policy model

INFO:rasa_core.agent:Persisted model to '/home/founder/rasa/models/dialogue'

6) Time to chat

Run the following code to start chatting with the bot.

#Starting the Bot

from rasa_core.agent import Agent

agent = Agent.load('models/dialogue', interpreter=model_directory)

INFO:rasa_nlu.components:Added 'nlp_spacy' to component cache. Key 'nlp_spacy-en'.

print("Your bot is ready to talk! Type your messages here or send 'stop'")

while True:
    a = input()
    if a == 'stop':
        break
    responses = agent.handle_message(a)
    for response in responses:
        print(response["text"])

Your bot is ready to talk! Type your messages here or send 'stop'

hello

Hey! How are you?

fine

To cheer you up, I can show you a cute picture of a dog, cat or a bird. Which one do you choose?

dog

Here is something to cheer you up: Invalid endpoint.

Did that help you?

yes

Great carry on!

thank you

Bye

stop

With this, we have built a chatbot that takes user input and gives contextually appropriate responses. In the next post, we will deploy it on Slack.

[Original article] https://towardsdatascience.com/building-a-conversational-chatbot-for-slack-using-rasa-and-python-part-1-bca5cc75d32f